OCA Breakfast at RSA 2023

May 22, 2023

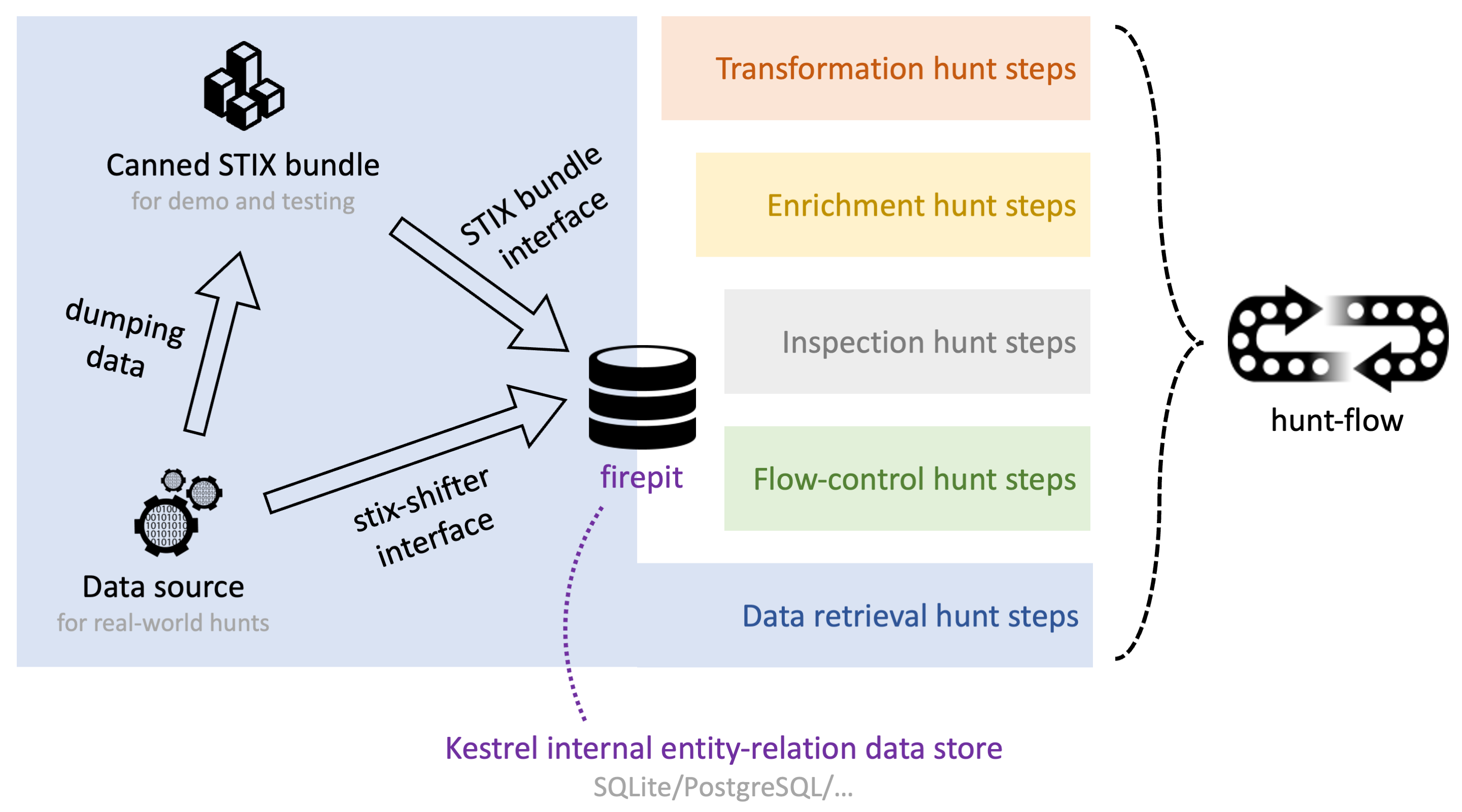

Kestrel Data Retrieval Explained

July 11, 2023

Federated search is a multi-stage pipeline between cybersecurity applications like Kestrel and data sources such as Elasticsearch or CrowdStrike. End-to-end testing of the entire pipeline is an important task for software quality control and is the foundation for developing scalability tests.

Today, we are happy to announce the Federated Search End-To-End Testing GitHub Repository and its first CI/CD usage for Kestrel, a federated search application. The repository establishes a framework for adding, scheduling, and running end-to-end tests against the STIX-Shifter federated search engine, either locally or in the cloud as GitHub Actions. This post briefly describes the main features of the framework, explains how to use it, and shows where to add new data sources and new tests.

What Can It Do?

End-to-end tests mimic end users using the application, so they capture the interactions between all the application components. When one component has a new major release, testing confirms that the release does not break or interfere with application functionality. One example use case is upgrading the STIX-Shifter package used in Kestrel from version 4 to version 5: the tests verify that the interactions between STIX-Shifter and Kestrel behave the same after the upgrade as before. The framework can also support the development of more complex test workflows, such as application performance testing, or of a quality control matrix for a category of components (e.g., the STIX-Shifter connectors).

Another benefit of testing is the continuous checking of the application build and deployment processes. These processes are exercised each time a testing workflow brings up a new test environment. Rebuilding the test environment from scratch surfaces any new issues that arise during the build. Most of these issues are triggered by external events, such as a new release of a Python package. The Continuous Integration/Continuous Delivery capabilities of the test environment can be used for development as well. A test environment can also serve as a sandbox for experimenting while creating new features or analytics.

A Framework for End-To-End Testing

The scripts in this repository are building blocks for test workflows. The repository has two main folders. The federated-search-core folder contains the scripts to build STIX-Shifter from source and to manage data sources (EDRs, SIEMs, log management systems, security data lakes, etc.). The application-test folder contains the scripts to build the applications that use federated search from source, configure them, and run their end-to-end tests. The testing framework can run locally using make, or remotely using the GitHub Actions workflows defined in the .github/workflows folder.

Each data source has a setup folder containing scripts to configure and set it up, import data, and clean up. Data source instances can be Docker containers or connections to an external server. Configuration includes securing the data source and setting up passwords. Data import ingests the data needed for testing; users can also run the data import scripts locally to ingest additional indices into a running data source instance. Finally, cleanup scripts free all the resources allocated to the data source once testing is complete.

Every application has three folders: setup, config, and test. The setup folder contains scripts to configure the application and build it from source. The config folder contains any configuration files the application needs. The test folder contains tests written with the Python behave testing framework.

We have provided one scalability and two end-to-end test workflows for Kestrel that use an Elasticsearch data source. To add a new data source to the framework, write its setup, data import, and cleanup scripts. To add a new application, write its setup, testing, and cleanup scripts. Finally, create the make targets for local testing and the GitHub Actions workflows for remote tests.

Using the Testing Framework for an OCA Project

We have implemented two GitHub Actions workflows that perform end-to-end testing for Kestrel (an application using federated search). The first testing workflow builds Kestrel from source code and installs STIX-Shifter as a package, while the second testing workflow installs both Kestrel and STIX-Shifter from source code. Each testing workflow has three phases: setting up the test environment, running the integration tests, and releasing the resources used for tests. Next, we describe each of these three phases in more detail.

Setting Up the End-to-end Testing Environment

The test environment setup phase has six steps: code checkout, virtual environment creation, code installation, data source setup, data import and application deployment.

Code Checkout

Code checkout clones code from three GitHub repositories: the STIX-Shifter repository, the Kestrel repository, and the Kestrel analytics repository. It can run locally using make checkout-kestrel checkout-kestrel-analytics checkout-stix-shifter. For local runs, the checkout can be customized with environment variables (e.g., $KESTREL_BRANCH, $KESTREL_ORG) that specify the organization, the repository, and the branch from which the code is cloned. When running remotely using GitHub Actions, these variables are defined in the run env.
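To make the role of these variables concrete, here is a minimal Python sketch of how a checkout step might turn them into a git clone command. It is not the repository's actual script: the default values and the $KESTREL_REPO variable name are assumptions made for illustration, and only $KESTREL_ORG and $KESTREL_BRANCH are named above.

```python
import os

# Assumed defaults for illustration; the real values live in the
# repository's make targets and workflow files.
DEFAULTS = {
    "KESTREL_ORG": "opencybersecurityalliance",
    "KESTREL_REPO": "kestrel-lang",  # hypothetical variable name
    "KESTREL_BRANCH": "develop",
}


def clone_command(env=None, dest="kestrel-lang"):
    """Build the `git clone` argument list from environment-style settings."""
    env = os.environ if env is None else env
    org = env.get("KESTREL_ORG", DEFAULTS["KESTREL_ORG"])
    repo = env.get("KESTREL_REPO", DEFAULTS["KESTREL_REPO"])
    branch = env.get("KESTREL_BRANCH", DEFAULTS["KESTREL_BRANCH"])
    url = f"https://github.com/{org}/{repo}.git"
    # --single-branch keeps the clone small by fetching only the chosen branch.
    return ["git", "clone", "--branch", branch, "--single-branch", url, dest]
```

Passing the list to subprocess.run(..., check=True) would perform the clone. Overriding only KESTREL_ORG and KESTREL_BRANCH is enough to test a fork's feature branch.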

Virtual Environment Creation

The second step creates a Python virtual environment where the testing workflow executes. It runs locally using the make venv command.

Code Installation

The third step retrieves all the prerequisite packages and builds Kestrel and/or STIX-Shifter from source. The make install-kestrel install-kestrel-analytics install-stix-shifter command invokes this script locally.

Elasticsearch Data Source Setup

Currently, integration testing supports only a single live data source: Elasticsearch. We use Docker to deploy Elasticsearch. To bring up the Elasticsearch data source, the script first downloads the Elasticsearch Docker image. Next, it configures, launches, and secures the Elasticsearch instance. Finally, it resets the Elasticsearch password and saves it for basic authentication. This step can run locally using the make install-elastic command.

Data Import

Data import downloads three Elasticsearch indices from the Kestrel Data Bucket repository. After processing the indices, the script uploads them to the Elasticsearch instance using ElasticDump. The processing step edits the index mapping files, changing the value of the process/command_line/ignore_above parameter from 256 (default) to 1024. This allows Elasticsearch to index the command_line attributes of process entities that are longer than 256 characters (up to the new 1024-character limit). This step can run locally using the make import-data-elastic command.
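The mapping change can be sketched in a few lines of Python. This is an illustration rather than the repository's script, and it assumes the mapping file holds a JSON object shaped like {"mappings": {"properties": {...}}}; the function name and parameters are hypothetical.

```python
import copy


def raise_ignore_above(mapping, path=("process", "command_line"), limit=1024):
    """Return a copy of `mapping` with `ignore_above` set to `limit` at `path`.

    Assumes the layout {"mappings": {"properties": {...}}}, where nested
    object fields carry their own "properties" dictionary.
    """
    updated = copy.deepcopy(mapping)  # leave the caller's mapping untouched
    node = updated["mappings"]
    for name in path:
        node = node["properties"][name]
    node["ignore_above"] = limit
    return updated
```

In practice the mapping would be read with json.load, passed through this function, and written back with json.dump before the upload.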

Application Deployment

In the final step, a script injects the Elasticsearch password into the Kestrel and STIX-Shifter configuration files. Then, it tests the environment setup end-to-end. The test runs a Kestrel huntbook that retrieves and analyzes data from the Elasticsearch instance. This step can run locally using the make deploy-kestrel command.
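One simple way to perform this kind of injection is placeholder substitution, sketched below in Python. The placeholder token __ES_PASSWORD__ and the function name are assumptions for illustration; the actual deployment script may use a different mechanism.

```python
import json


def inject_password(config_text, password, placeholder="__ES_PASSWORD__"):
    """Replace a placeholder token in a configuration file's text.

    The password is emitted as a double-quoted string via json.dumps so that
    characters such as '#' or ':' are not misread by a YAML or JSON parser.
    """
    if placeholder not in config_text:
        raise ValueError(f"placeholder {placeholder!r} not found in config")
    return config_text.replace(placeholder, json.dumps(password))
```

Failing loudly when the placeholder is missing helps catch a configuration template that has drifted from what the script expects.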

Running the End-to-end Tests

We have derived the end-to-end tests for Kestrel from three Notebooks presented at Black Hat 22. The tests check the ability of a threat hunter (Kestrel end user) to accurately start hunting from TTPs, conduct a cross-host campaign discovery, and apply analytics in a hunt. Tests can run locally using the make test-kestrel-elastic command.

Cleanup

Cleanup frees the resources used by the tests and removes any files on the testing machine. It begins by removing the Docker container running the Elasticsearch instance. Next, it removes the analytics containers spawned during testing. It then removes the Kestrel analytics Docker images created during testing. Finally, it removes the entire ${HOME}/huntingtest directory, including the cloned GitHub source code, the data files downloaded or extracted from archives, and the Python virtual environment where testing took place. Cleanup can run locally using the make clean-all command.
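Putting the three phases together, a local run can be sketched as a small Python driver that calls the make targets named in this post in order and always runs cleanup, even when an earlier step fails. The driver itself is hypothetical and is not part of the repository; only the target names come from the sections above.

```python
import subprocess

# Make targets as named in this post, in the order the phases run.
SETUP_TARGETS = [
    "checkout-kestrel", "checkout-kestrel-analytics", "checkout-stix-shifter",
    "venv",
    "install-kestrel", "install-kestrel-analytics", "install-stix-shifter",
    "install-elastic",
    "import-data-elastic",
    "deploy-kestrel",
]
TEST_TARGETS = ["test-kestrel-elastic"]
CLEANUP_TARGETS = ["clean-all"]


def run_make(target):
    """Run one make target; raises CalledProcessError if it fails."""
    subprocess.run(["make", target], check=True)


def run_workflow(run=run_make):
    """Set up, test, and always clean up, even when an earlier target fails."""
    try:
        for target in SETUP_TARGETS + TEST_TARGETS:
            run(target)
    finally:
        for target in CLEANUP_TARGETS:
            run(target)
```

The try/finally mirrors the intent of the cleanup phase: containers, images, and the working directory are released whether or not the tests pass. The run parameter lets the sequence be exercised without invoking make.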

Triggering Test Workflows Remotely

The GitHub Actions workflows defined in our testing repository can be triggered remotely from other repositories. For example, the Kestrel end-to-end testing flow can be triggered remotely from the Kestrel repository using the Kestrel integration testing workflow. This remote activation capability lets application developers invoke end-to-end testing manually, or add it to the list of automated checks performed when code is pushed to a main project branch or a pull request is opened.
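This post does not specify which GitHub mechanism the Kestrel integration testing workflow uses. One common option is the GitHub REST API's workflow dispatch endpoint, which starts a workflow that declares the workflow_dispatch trigger. The Python sketch below only builds such a request; the owner, repository, and workflow file names in the usage note are hypothetical.

```python
import json
import urllib.request


def build_dispatch_request(owner, repo, workflow_file, ref, token, inputs=None):
    """Build (but do not send) a workflow_dispatch request for GitHub Actions."""
    url = (
        f"https://api.github.com/repos/{owner}/{repo}"
        f"/actions/workflows/{workflow_file}/dispatches"
    )
    body = {"ref": ref}  # branch or tag the workflow should run on
    if inputs:
        body["inputs"] = inputs  # optional inputs declared by the workflow
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        method="POST",
        headers={
            "Accept": "application/vnd.github+json",
            "Authorization": f"Bearer {token}",
        },
    )
```

For example, build_dispatch_request("example-org", "example-e2e-tests", "kestrel-e2e.yml", "main", token) returns a request that urllib.request.urlopen can send. The token needs permission to run Actions workflows in the target repository.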

Make Contributions to this Repository

We welcome contributions to this repository. New data sources, test cases, or data are examples of contributions that will make an immediate positive impact. Or, if you have another idea, please open an issue in the repository!

Future Work

Going forward, we plan to add more capabilities to this repository. We want to provide secret ingestion capabilities that would allow connecting to external, existing repositories during testing. We also want to implement performance testing and to provide generic STIX-Shifter tests across data sources, as a step toward the broader goal of STIX-Shifter connector quality control.